Googlum is brokum

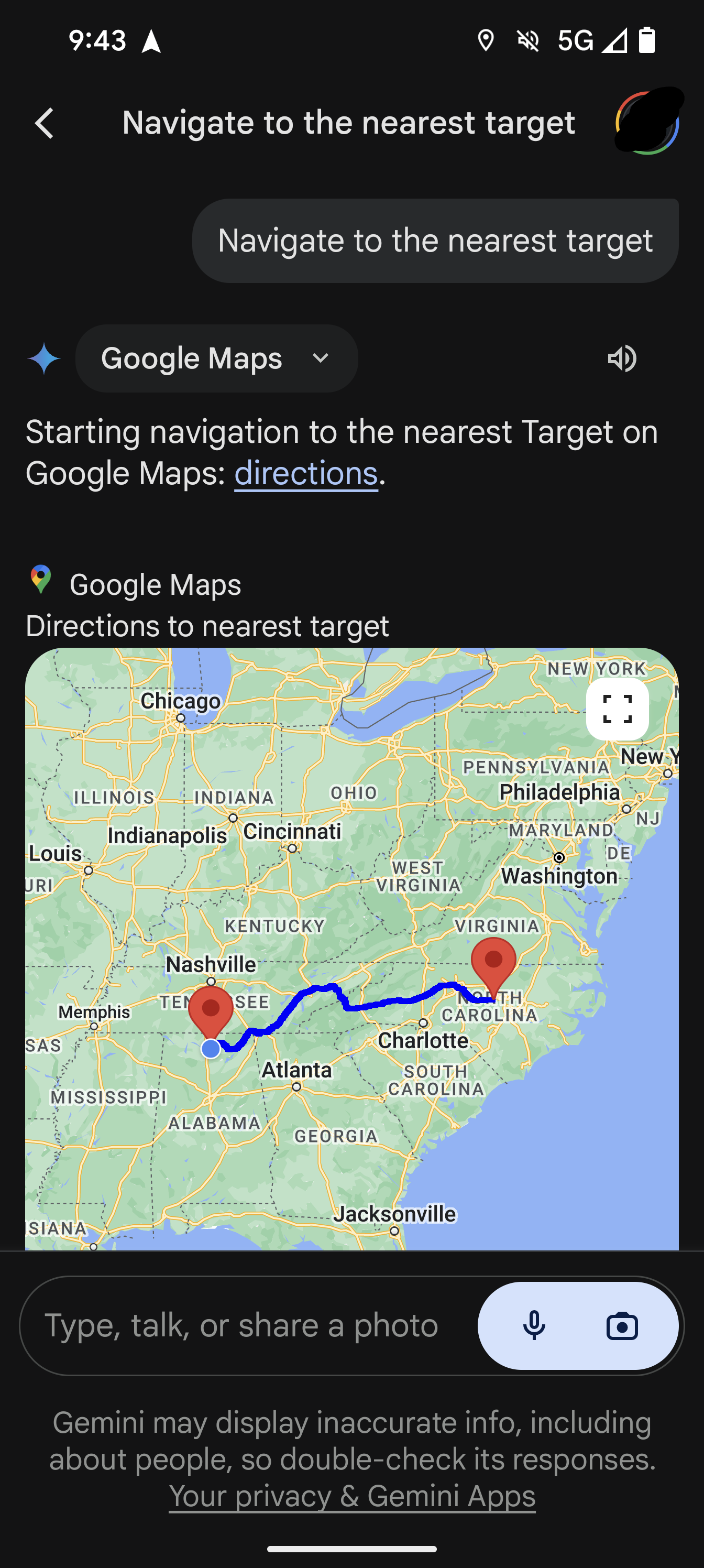

I just tried to have Gemini navigate to the nearest Starbucks and the POS found one 8hrs and 38mins away.

Absolute trash.

Just tried it with Target and again, it’s sending me to Raleigh, North Carolina.

It seems to think you need to leave Alabama but aren’t ready for a state as tolerable as Georgia.

I would totally leave if the “salary to cost of living” ratio wasn’t so damn good.

I’d move to Germany or the Netherlands or Sweden or Norway so fast if I could afford it.

That leads me to believe it thinks you are in North Carolina. Have you given Gemini location access? Are you on a VPN?

No VPN, it all has proper location access. I even tried it with a local restaurant that I didn’t think was a chain, and it found one in Tennessee. I’m like 10 minutes away from where I told it to go.

Despite that, it delivers its results with much applum!

Quality pum

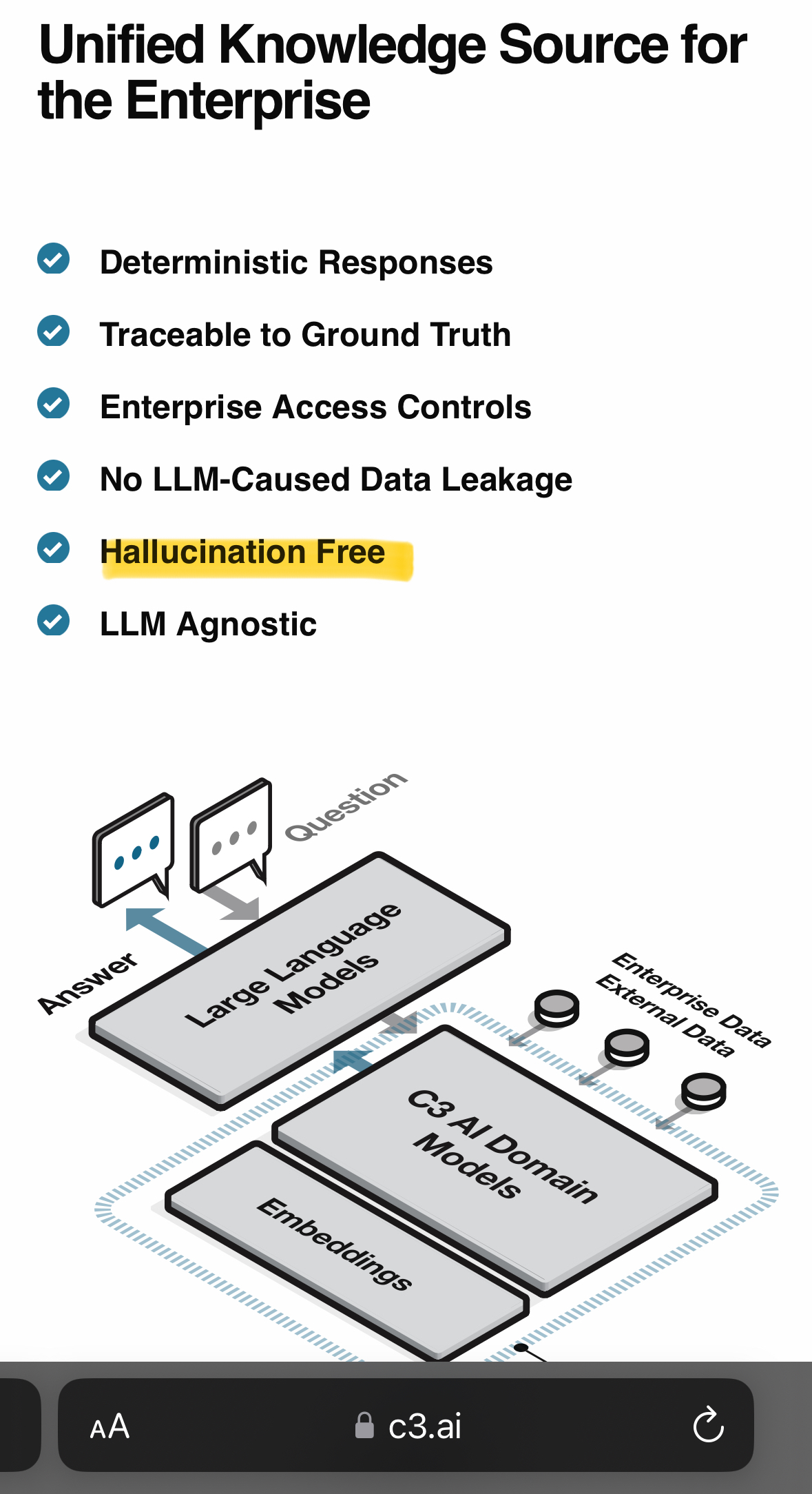

Some “AI” LLMs resort to light hallucinations. And then ones like this straight-up gaslight you!

Factual accuracy in LLMs is “an area of active research”, i.e. they haven’t the foggiest how to make them stop spouting nonsense.

DuckDuckGo figured this out quite a while ago: just fucking summarize Wikipedia articles and link to the precise section it lifted text from.

We can’t fleece investors with that though, needs more “AI”.

Because accuracy requires that you make a reasonable distinction between truth and fiction, and that requires context, meaning, understanding. Hell, full humans aren’t that great at this task. This isn’t a small problem, I don’t think you solve it without creating AGI.

MFer accidentally got “plum” right and didn’t even know it…

Coconutum

You did WHAT to em?

I barely know 'em!

Ok, let me try listing words that end in “um” that could be (even tangentially) considered food.

- Plum

- Gum

- Chum

- Rum

- Alum

- Rum, again

- Sea People

I think that’s all of them.

Your mum.

Happy belated Mother’s Day?

The Sea Peoples consumed by the Late Bronze Age collapse (or were a catalyst thereof)?

Or just people at sea eaten by krakens? Cause they definitely count.

It’s a dirty joke.

Bum

Cryptosporidium

Cum

Opium

Possum

Scrotum

Scum

All very Yum for the Tum!

Totally reproducible, just with slightly different prompts.

There’s going to be an entire generation of people growing up with this and “learning” this way. It’s like every tech company got together and agreed to kill any chance of smart kids.

Isn’t it the opposite? Kids see so many obviously wrong answers that they learn to check everything.

How do they know something is obviously wrong when they’re trying to learn it? For “bananum”, sure, but for anything at school or college?

The bananum was my point. Maybe as AI improves there won’t be as many of these obviously wrong things, but as it stands virtually any Google search gets a shitty wrong answer from AI, so they see tons of this bad info well before college.

One can hope…

This makes me happy since I won’t lose my job to newbies.

And yet it doesn’t even list ‘Plum’, or did it think ‘Applum’ was just a variation of a plum?

Well, plum originally comes from applum which morphed into a plum so yeah.

And that’s absolutely not true.

I will start using this as a factoid

So will a bunch of LLMs

A lot of folks on the internet don’t get even the most obvious jokes without some sort of sarcasm indicator, because some things are really hard to read in text vs in person. LLMs have no idea what the hell sarcasm is and definitely have some in their training data, especially if they were trained on any of my old Reddit comments.

Did you know that “factoid” means a small piece of trivia? /s

Strawberrum sounds like it’ll be at least 20% abv. I’d like a nice cold glass of that.

Strawberrum? Barely knew 'em!

It’s crazy how bad AI gets if you make it list names ending with a certain pattern. I wonder why that is.

I’m not an expert, but it has something to do with full words vs partial words. It also can’t play Wordle because it doesn’t have a proper concept of individual letters in that way; it’s trained to only handle full words.

They don’t even handle full words. It’s just arbitrary groups of characters (including spaces and other stuff like apostrophes, afaik) that are represented to the software as indexes in a list. It literally has no clue what language even is; it’s a glorified calculator that happens to work on words.
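To make the “indexes in a list” point concrete, here’s a toy sketch. The vocabulary and the greedy longest-match splitting are made-up assumptions for illustration (real tokenizers learn much bigger vocabularies, e.g. with byte-pair encoding), but the effect is similar: the model receives a list of integers, not letters.

```python
# Hypothetical toy vocabulary: a few multi-letter chunks plus single letters
# as a fallback. Real models learn tens of thousands of entries.
VOCAB = ["straw", "berry", "ban", "ana", "app", "le", "um", " ", "'"] + list(
    "abcdefghijklmnopqrstuvwxyz"
)
TOKEN_ID = {tok: i for i, tok in enumerate(VOCAB)}


def tokenize(text: str) -> list[int]:
    """Split text into vocabulary IDs using greedy longest-match."""
    ids = []
    i = 0
    while i < len(text):
        # Try the longest vocabulary entry that matches at position i.
        for length in range(len(text) - i, 0, -1):
            piece = text[i:i + length]
            if piece in TOKEN_ID:
                ids.append(TOKEN_ID[piece])
                i += length
                break
        else:
            raise ValueError(f"no token for {text[i]!r}")
    return ids


print(tokenize("strawberry"))                # [0, 1]
print([VOCAB[i] for i in tokenize("plum")])  # ['p', 'l', 'um']
```

With this toy vocabulary, “strawberry” arrives as just the two numbers 0 and 1 (straw + berry). Nothing in those integers says which letters the word ends with, which is roughly why “ends in um” questions trip these models up.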

I mean, isn’t any program essentially a glorified calculator?

Not really, a basic calculator doesn’t tend to have variables and stuff like that.

I say it’s a glorified calculator because it’s just getting input in the form of numbers (again, it has no clue what a language or word is) and spitting back out some numbers that are then reconstructed into words, which is precisely how we use calculators.

That’s interesting, didn’t know

It can’t see what tokens it puts out, you would need additional passes on the output for it to get it right. It’s computationally expensive, so I’m pretty sure that didn’t happen here.

Doesn’t it work by literally passing in everything it has said so far to determine the next word?

It chunks text up into tokens, so it isn’t processing the words as if they were composed of letters.

With the amount of processing it takes to generate the output, a simple pass over the to-be final output would make sense…
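A rough sketch of what such a pass could look like if it were done in ordinary code rather than by the model. The reference list is a hypothetical stand-in for a real dictionary, and the “generated” answers are the made-up words from this thread.

```python
# Hypothetical reference list; a real check would need a proper food dictionary.
KNOWN_FOODS = {"plum", "apple", "banana", "strawberry", "tomato", "coconut", "rum", "gum"}


def verify(candidates: list[str], suffix: str) -> list[str]:
    """Keep only answers that really end in `suffix` and are known foods."""
    return [
        word
        for word in candidates
        if word.lower().endswith(suffix.lower()) and word.lower() in KNOWN_FOODS
    ]


# Answers of the kind described in this thread.
generated = ["Applum", "Bananum", "Strawberrum", "Tomatum", "Coconutum", "Plum"]
print(verify(generated, "um"))  # ['Plum']
```

Checking the letters is trivial for normal string code, since it works on characters rather than tokens. The hard part is the reference list: anything missing from it gets thrown out, real or not.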

LLMs aren’t really capable of understanding spelling. They’re token prediction machines.

LLMs have three major components: a massive database of “relatedness” (how closely related the meanings of tokens are), a transformer (figuring out which of the previous words carry the most contextual meaning), and statistical modeling (the likelihood of the next word, like what your phone’s predictive text does).
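The “statistical modeling” step, stripped way down: score every candidate next token, turn the scores into probabilities, and pick one. The candidates and scores below are invented for illustration, whole words stand in for tokens, and always taking the single most likely option (greedy decoding) is just one choice; real systems often sample instead.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max so exp() can't overflow
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical candidates and made-up scores after a prompt asking for
# fruits ending in "um".
candidates = ["Applum", "Bananum", "Rum", "Apple"]
scores = [2.0, 1.5, 0.5, 1.0]

probs = softmax(scores)
next_token = candidates[probs.index(max(probs))]  # greedy: most likely wins
print(next_token)  # Applum
```

Nothing in this step checks whether “Applum” is a real fruit; it just wins on score.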

LLMs don’t have any capability to understand spelling, unless it’s something they’ve been specifically trained on, like “color” vs “colour”, which is discussed in many training texts.

“Fruits ending in ‘um’” or “Australian towns beginning with ‘T’” aren’t discussed in the training data often enough to build a strong relatedness database for, so it can’t answer those sorts of questions.

Gemini thought we name food the way we name elements on the periodic table

Plutonium is food once.

A gram of plutonium has enough calories to last you the rest of your life.

Applum bananum jeans, boots with the fur.

AI is truly going to change the world.

30 years from now: “Haven’t they always been called Strawberrums?”

We’ve always been at war with Bananum!

Looks like someone set Google to “Herakles Mode”.

Tomatum… that’s the one

Ok, I feel like there have been more than enough articles explaining that these things don’t understand logic. Seriously. Misunderstanding their capabilities at this point is getting old. It’s time to start making stupid painful.