I was reminded of a particular anecdote I need to get out of my system.

There was a time on a particular fiction series’ fan forum’s IRC channel when I had to convince a very enthusiastic teenage fan that the instructions they found on 4chan to open a portal to the parallel world of that particular work of fantasy fiction were actually a method for synthesizing and inhaling poison gas.

The weird part was that they admitted they knew it was probably just a cruel prank, but were still willing to try just in case it was real. We had to actually find and link articles where the same reaction was described as an actual documented suicide method, albeit an unreliable one, to convince them not to go for it.

By my estimate that person was about high school age, almost certainly over 13 and probably over 15. Mostly their behavior seemed normal for the age, certainly overenthusiastic about the fandom and with obvious signs of teenage ennui, but both of those are typical for someone in their mid-teens. It was just this strange incident of extreme gullibility and self-destructive devotion to a fandom that really stuck with me.

That’s a terrifying story. I’m happy it seems to have worked out ok.

A state of mind that’s unfortunately quite common. See religion, for example: a magical kingdom ruled by an all-powerful being that lets you in if you behave accordingly. But you have to die first. Yet people gobble it up and even do bad things to other people just in case it might be real, even if it likely isn’t.

In this case it sounded like a bunch of amoral assholes on 4chan tried to trick a vulnerable individual into killing themselves. A religion that operated like that would not be able to sustain itself and spread.

I would argue that such things do happen, the cult “Heaven’s Gate” probably being one of the most notorious examples. Thankfully, however, this is not a widespread phenomenon.

Yet, amoral assholes tricking vulnerable people with tales of magic is how religion spreads.

No it isn’t, you idiot. But please continue to spout 90s-era New Atheism propaganda as if it will change anyone’s mind at this point.

Nit: “New Atheism” was the decade after that. Gary Wolf coined the term in a Wired story from 2006.

Thanks for the clarification. I kinda missed that entire thing online during the time.

of course they’re one of these

how did you imagine this was gonna go?

- you show up in a community you don’t participate in and post low-quality edgelord atheist horseshit that doesn’t have anything to do with what you replied to

- one of the regulars calls you out on your weird bullshit

- you report them for breaking a rule that doesn’t exist and I go “uhhhh ok!” and they’re the one that gets banned for some reason???

The way things are going, KnowYourMeme will be the only reputable journalistic outlet in a few years.

Except far-right chuds routinely snatch up meme pages for culture-war-related controversies to control the narrative. Most notoriously during the Gamergate era, but they also did this with the recent Godot nontroversy.

Didn’t places like BuzzFeed or the Huffington Post also start out as clickbait BS sites and now are reputable journalistic outlets? It could happen!

Okay, quick prediction time:

-

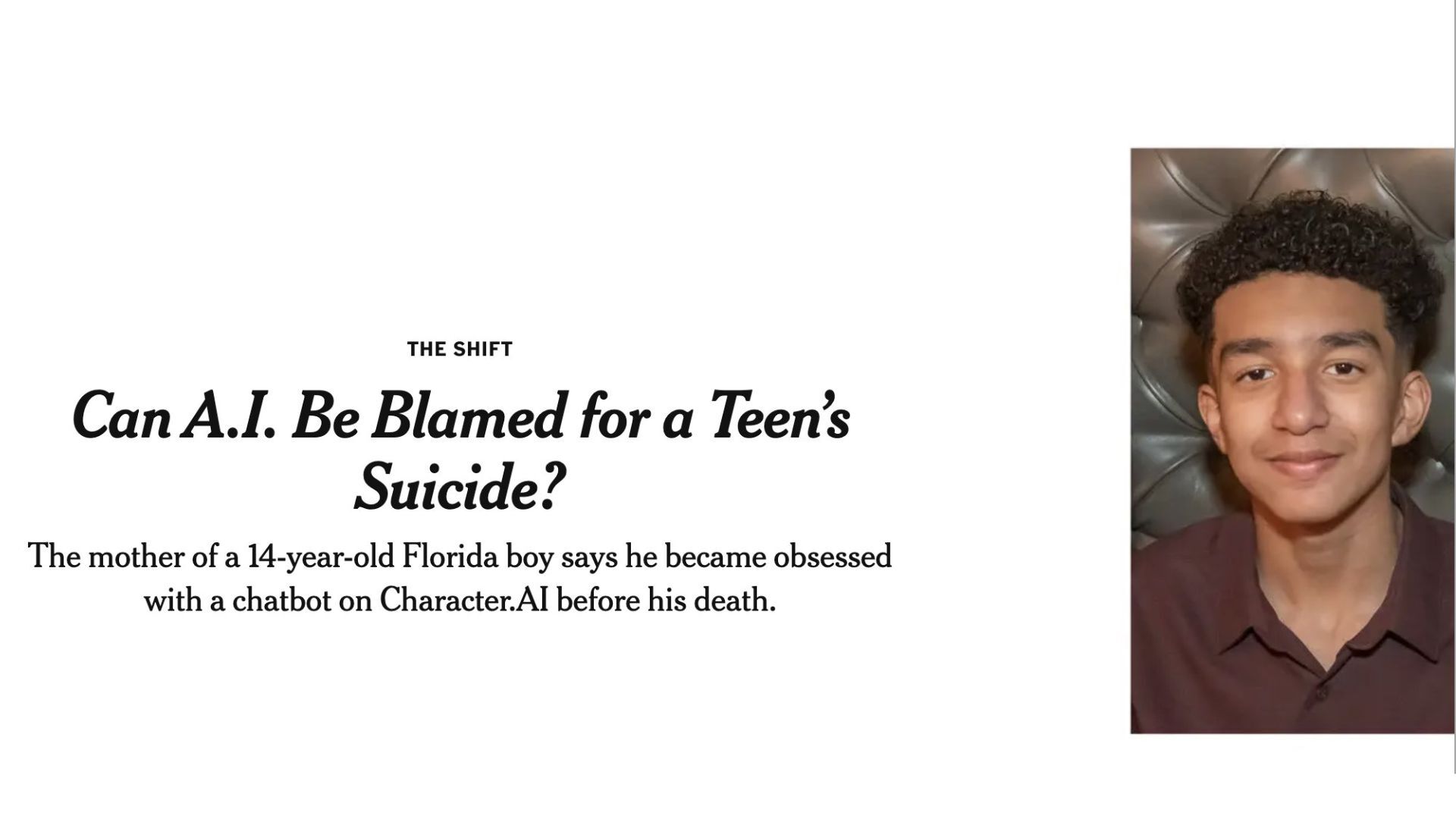

Even if character.ai manages to win the lawsuit, this is probably gonna be the company’s death knell. Even if the fallout of this incident doesn’t lead to heavy regulation coming down on them, the death of one of their users is gonna cause horrific damage to their (already pretty poor AFAIK) reputation.

- On a larger scale, chatbot apps like Replika and character.ai (if not chatbots in general) are probably gonna go into a serious decline thanks to this - the idea that “our chatbot can potentially kill you” is now firmly planted in the public’s mind, and I suspect their userbase is gonna blow their lid from how heavily the major apps are gonna lock their shit down.

I’m not familiar with Character.ai. Is their business model that they take famous fictional characters, force feed their dialog into LLMs, then offer these LLMs as personalized chatbots?

From the looks of things, that’s how their business model works.

I found an Ars piece:

Asked for comment, Google noted that Character.AI is a separate company in which Google has no ownership stake and denied involvement in developing the chatbots.

However, according to the lawsuit, former Google engineers at Character Technologies “never succeeded in distinguishing themselves from Google in a meaningful way.” Allegedly, the plan all along was to let Shazeer and De Freitas run wild with Character.AI—allegedly at an operating cost of $30 million per month despite low subscriber rates while profiting barely more than a million per month—without impacting the Google brand or sparking antitrust scrutiny.

Updated ‘don’t be evil’ into ‘if you are going to convince children to kill themselves while doing massive copyright infringement, at least don’t hurt the brand’
