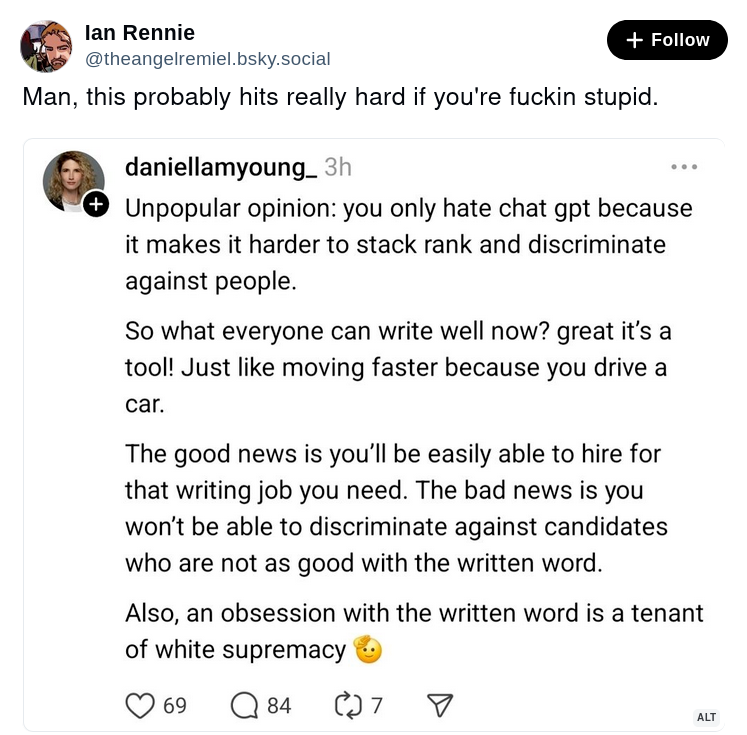

daniellamyoung_3h

Unpopular opinion: you only hate ChatGPT because it makes it harder to stack rank and discriminate against people.

So what if everyone can write well now? Great, it’s a tool! Just like moving faster because you drive a car.

The good news is you’ll easily be able to hire for that writing job you need. The bad news is you won’t be able to discriminate against candidates who are not as good with the written word.

Also, an obsession with the written word is a tenet of white supremacy [salute emoji]

Ian Rennie

@theangelremiel.bsky.social

Man, this probably hits really hard if you’re fuckin stupid.

Except that the ability to communicate is a very real skill that’s important for many jobs, and ChatGPT in this case is the equivalent of an advanced version of spelling and grammar check combined with a (sometimes) expert system.

So yeah, if there’s somebody who can actually write a good introduction letter and answer questions in an interview, versus somebody who just manages to get ChatGPT to generate a cover letter and answer questions quickly: which one is more likely to be able to communicate well?

Don’t get me wrong, it can level the playing field for some people in some positions. I know somebody who uses it to generate templates for various questions and situations and then fills in the appropriate details, resulting in a well-formatted communication. It’s quite useful for people who have professional knowledge of a situation but might have weaker writing ability because English is their second language, etc. However, that is always in a situation where there’s time to sanitize the inputs and validate the output, often choosing among several outputs and reworking the prompt to get the desired result.

In many cases it’s not going to be available past the application/overview process due to privacy concerns, and it’s still a crapshoot whether it provides accurate information. We’ve already seen cases of lawyers and other professionals relying on it for professional information that turned out to be completely fabricated.

LLMs are distinctly different from expert systems.

Expert systems are designed to be perfectly correct in specific domains, but not to communicate.

LLMs are designed to generate confident statements with no regard for correctness.

Don’t make me tap the sign

We don’t correct people when they are wrong. We do other things.

Yeah. I should have said “the illusion of” an expert system or something similar. An LLM can, for example, produce decent working code that meets a given request, but it can also spit out garbage that doesn’t work or has major vulnerabilities. It’s a crapshoot.

alert alert we’ve got one of them on the doorstep

I don’t understand what part of their statement you read as pro-LLM

arguing from the existence of expert systems (which were the fantasy of the previous wave)

Expert systems actually worked.

https://en.wikipedia.org/wiki/Expert_system

People who know about AI?

you probably don’t know this, but this post is so much funnier than you meant it to be

and it (probably) still won’t save you