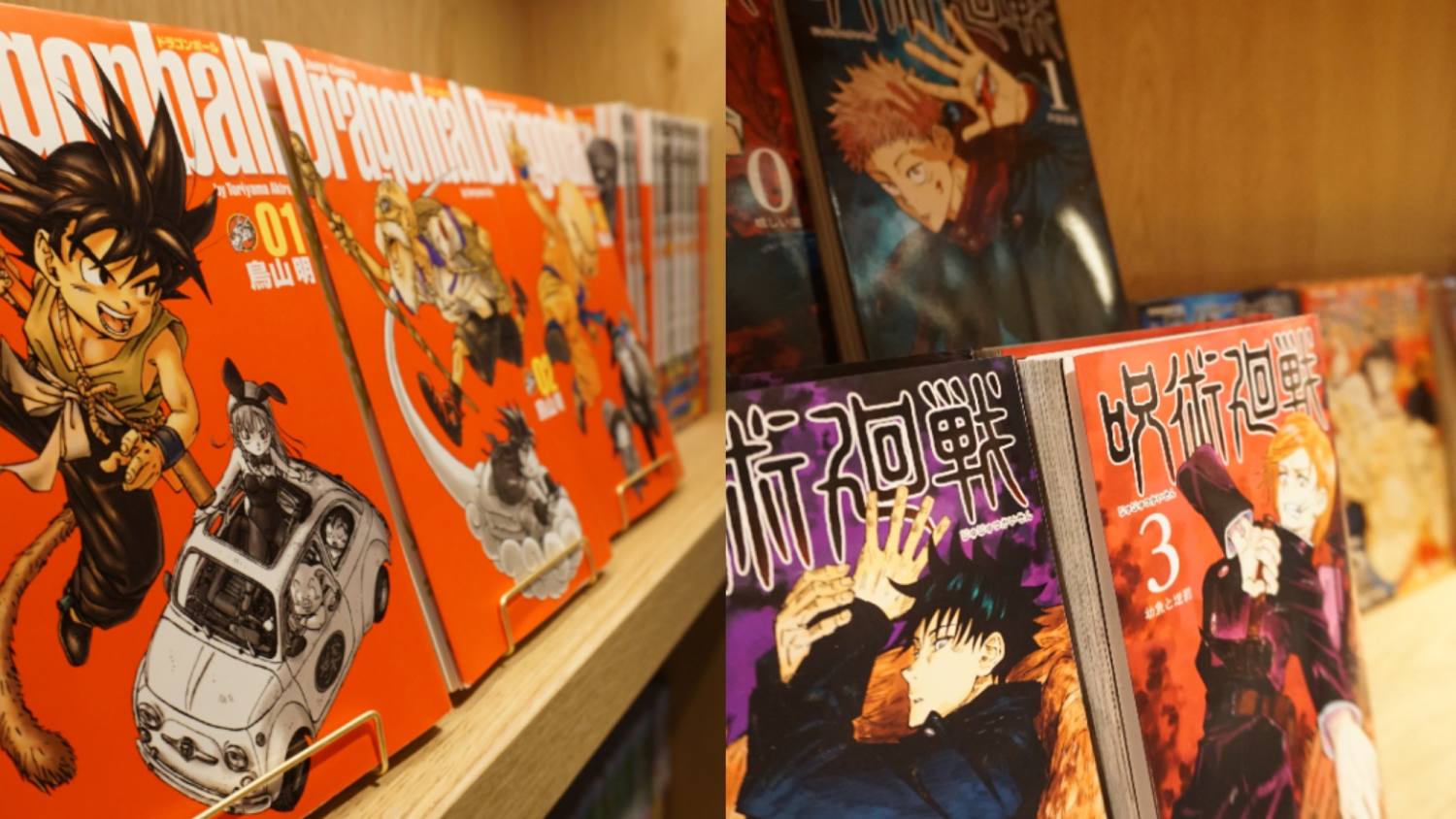

Japanese publishers, including Shueisha and Shogakukan, have invested $4.9 million in Mantra, a startup leveraging AI to accelerate manga translation.

I don’t read much manga, so I don’t know how good or bad the translations are, but I thought the news was interesting at least.

Not optimistic. How would this handle localization? Or turns of phrase?

Translators are a cultural bridge that far exceeds the two languages.

A good company will use both. AI does 80% and a human does the final check.

I did that once; it was still quite bad.

This is actually one of the best use cases of LLM. Indeed, some culture and nuance may be lost in translation, but that is true of every other translation. And most of the time, if we know ahead of time what kind of work is being translated, we can anticipate heavier use of nuanced language and adjust accordingly, or have a human skim it.

After all, most “AI” is basically feature embedding in higher dimensions. Words in different languages that refer to the same concept should appear close to each other in those dimensions.
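To make “close to each other” concrete, here is a minimal Python sketch. The three-dimensional vectors are written by hand purely for illustration (real models learn embeddings with hundreds or thousands of dimensions from data), and `TOY_EMBEDDINGS` and `nearest` are hypothetical names, not part of any library.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Invented toy embeddings (an assumption for illustration, not real model output).
# Words for the same concept are deliberately given similar vectors.
TOY_EMBEDDINGS = {
    "king": [0.90, 0.10, 0.00],
    "王": [0.85, 0.15, 0.05],  # Japanese for "king"
    "measuring stick": [0.00, 0.25, 0.90],
    "定規": [0.05, 0.20, 0.90],  # Japanese for a measuring ruler
}


def nearest(word, candidates):
    """Return the candidate whose toy embedding is closest to `word`."""
    return max(
        candidates,
        key=lambda c: cosine_similarity(TOY_EMBEDDINGS[word], TOY_EMBEDDINGS[c]),
    )


print(nearest("王", ["king", "measuring stick"]))  # king
```

This only shows the lookup step. Whether a trained model actually places a given Japanese word near the right English one depends on its training data, which is the part being argued about in this thread.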

This is actually one of the best use cases of LLM.

No, it’s quite simply not. At all.

An LLM is an entirely statistical model. To the degree that it strings words together in an order that makes some sort of sense, it’s ONLY because those words are statistically likely to be strung together in that order.

Japanese is an extremely imprecise and contextual language, particularly in its written form. Most kanji have multiple meanings, and often even a notably wide range of meanings, so a purely statistical model is already handicapped in any attempt to translate the intended meaning to another language. And Japanese creative writing, and manga especially, depends heavily on deliberately unusual uses of specific kanji to convey subtle bits of background information, moods, attitudes, hidden meanings or the like, or just as wordplay - puns, alliteration and the like.

And LLMs have no way to recognize any of that nuance. All they can do is regurgitate the most statistically likely string of words.

That will likely provide tolerable results with something that’s written simply and straightforwardly, but as soon as it gets to any of the countless manga that rely on unusual kanji readings and wordplay to convey nuance, it’s going to utterly and completely fail, since it has and can have no actual understanding of the author’s intent, so no basis on which to choose the correct reading of the kanji. All it can do is regurgitate the most statistically likely one, which in those sorts of cases is the one that’s absolutely guaranteed to be wrong.

Words strung together form a sentence that carries meaning; yes, that is language. And the order of those words affects the meaning too, as in any language. An LLM then represents those statistically significant word combinations as features in a higher-dimensional space. Now, LLMs themselves don’t understand it, nor can they reason from those features. But they can measure distances in that space. And if, for example, a given kanji and its translation appear together often enough, the LLM will place that kanji and its translation closer together in the feature space.

The more tokens of context the model gets, the more precise the features will be. You should understand that an LLM will not look at a single kanji in isolation; rather, it can read the whole page or book. So a single kanji may be statistically paired with the word “king” or whatever, but with context from the previous tokens it can become another word. And again, if we know the kind of work in advance, we could use a different model for the type of language usually used in it. You could have a shonen manga translation model, for example, or one for isekai web novels. Each would give the best results for its respective type of work.

I am not saying it will give 100% correct results, but neither does human translation, as it will always be a lossy process. But you do need to understand that statistical models aren’t inherently bad at embedding different meanings for the same word. “Ruler” in isolation will be statistically likely to mean either an object used to measure or a person in charge of a country, depending on the model used. But in the same LLM, “male ruler” will land in a significantly different location in the feature space if the model leaned toward the measuring object, or in a nearby one if it leaned toward the head of a country.
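Here is a rough Python sketch of that “ruler” versus “male ruler” shift. Everything in it is a toy assumption: the two-dimensional sense vectors, the `PRIOR` for the bare word, and the `CONTEXT` pulls are invented numbers, and simply adding context vectors is a stand-in for what a real transformer does with attention.

```python
import math

# Two hand-made sense directions (hypothetical, for illustration only).
SENSES = {
    "measuring tool": [1.0, 0.0],
    "head of state": [0.0, 1.0],
}

# Invented starting point for "ruler" with no context: leans toward the tool.
PRIOR = [0.6, 0.4]

# Invented pulls that neighbouring words exert on the representation.
CONTEXT = {
    "male": [0.0, 0.8],
    "plastic": [0.8, 0.0],
}


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def disambiguate(context_words):
    """Shift the bare-word vector by its context, then pick the closest sense."""
    vec = list(PRIOR)
    for word in context_words:
        pull = CONTEXT.get(word, [0.0, 0.0])  # unknown words add nothing
        vec = [v + p for v, p in zip(vec, pull)]
    return max(SENSES, key=lambda sense: cosine(vec, SENSES[sense]))


print(disambiguate([]))        # measuring tool
print(disambiguate(["male"]))  # head of state
```

In this toy setup the bare word lands nearer the measuring tool, and adding “male” moves it across to the head of state. It says nothing about how well a real model handles deliberately unusual kanji readings, which is the objection raised above.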

You’re wrong, and the person you are replying to is right. I can say that because I’m learning Japanese and what they are saying makes sense.

Well, this is just my two cents. I think you misunderstand the point I am making. First of all, accept that translation is a lossy process. A translation will always lose meaning one way or another, and without writing a full essay about a piece of art, you will never get the full picture of it in translation. Think of it this way: does a haiku in Japanese make sense in English? Maybe, but most likely not. So anyone who wants to experience the full art must either read an essay about it or learn the original language. But for a story, a translation can at least give you the gist of the events that are happening. A story inherently has events that have to be conveyed. So some loss of subtlety can be tolerated, since the highlight is something else (the sequence of events).

Secondly, how the model works. GPT is a very poor representative of a translation model. A Generative Pretrained Transformer, well, generates something. I’d argue translation is not a generative task but rather a distance-calculation task. I think you should read up on how current machine learning models work. I suggest the 3Blue1Brown channel on YouTube, as he has a good video on the topic, and very recently Welch Labs also made a video comparing it to AlexNet, (arguably) the first breakthrough in computer vision.

I’ve seen enough “Google translations” to know I want nothing to do with this venture.

Google translation

Not the same thing. Not even close.

It’s a translation done by a machine, making frequent mistakes and failing to understand the wider context that contributes to the intended meaning. It’s the same in every way that matters.

In the context of my work, translations done by LLMs are working great so far and have no problem grasping context. And that is with general models and not specialized ones for the task which I assume this will be. So I will give it a chance instead of outright jumping on the AI hate train.

Grasping context when there’s subtext and allegories is not enough.

For those that are interested in a different perspective on this, not of the publishers, but the translators, Anime Herald actually interviewed several professional translators about this topic. I made a post about it here with some discussion as well.

It’s a good read and the translators are realistic about what is coming:

Zack Morrison (translator): I would say that, like it or not, AI is coming. That genie is not going back in the bottle. And it is improving. The days of Google Translate being a joke are gone. Who knows what AI translation will be like ten years from now? Twenty? Something people need to think about. Hating it is not going to make it go away.

However, at the same time, the companies making use of it are too optimistic about its current capabilities:

Kim Morrissy (translator): Corporations should definitely be more aware of the current limitations of MTL/AI and not see them as a shortcut to reducing labour costs. It’s not just purely a matter of ethics but making people aware that current applications will either see a big drop in quality or require more human labour than they were led to believe.

that genie is not going back to the bottle

IDK, but the anti-GMO movement managed to mostly put back a genie into its bottle. We just need to fight harder.

AI translation has gotten extremely good. It even replaces the text into English in nearly the same style/font!

I actually used my translator on my phone to read a Japanese manga when I was traveling. It needed another level of human revision, but I was able to get most of the story.

If you were reading manga with a translator app, why not go with a scanlation release instead?

If you want to read manga in Japanese then I suggest learning Japanese

Hell yeah, AI-assisted Duwang

Someone needs to fine-tune a model on that translation.

The last MTL I read was fine but not great, but that already describes many official localizations out there. Even if the MTL is bad, it’ll probably get rid of the cheap, low-quality localization teams and leave the work in the hands of actual talent or passionate fans. Either way, I don’t see this as being a bad thing.

On the other hand, the biggest localization scandal (for lack of a better word) didn’t happen at the translation, it happened when failed YA authors were allowed to rewrite the work, which will still probably be a problem. So it might not do very much good either.

On the other hand, the biggest localization scandal (for lack of a better word) didn’t happen at the translation, it happened when failed YA authors were allowed to rewrite the work, which will still probably be a problem. So it might not do very much good either.

I think this is an important point. If this can take the translator’s ego out of the equation, it would be a clear win. But then, they would probably insert it back again at the revision stage. My best-case scenario would be that, with the AI doing the heavy lifting, there is enough human workforce to go around that the type of translators who rewrite the story find themselves jobless.